Introduction

This report presents the results of a pretest and a posttest administered for a training on monitoring and evaluation (M&E) concepts. The purpose of the tests was to assess participants' knowledge and understanding of the basic concepts and tools of M&E before and after the training session. The report also compares the results of the two tests by module and provides some recommendations for improvement.

Executive summary

In project management and evaluation, understanding statistical analysis is crucial. A common pitfall is to classify results as ‘improved’ or ‘unimproved’ based solely on whether the difference between pretest and posttest proportions is greater than zero. This approach, while simple, can mask important nuances in the data and lead to inaccurate conclusions about the effectiveness of interventions or trainings.

A more robust approach is to disaggregate the analysis by theme and test whether the difference between pretest and posttest scores is statistically significant. This shows which topics or modules improved significantly and which need more attention or reinforcement, so managers can see where the training is working well and where it should be adjusted or enhanced. Awareness of these statistical pitfalls supports better-informed decisions and underscores the importance of statistical literacy, and of continuous learning in this area, for project managers.

Methodology

Pretest and Posttest

The pretest and posttest were used to gauge participants’ knowledge levels before and after the training. Each test consisted of eight multiple-choice questions covering three core topics: understanding monitoring and evaluation, tools for project management, and data collection & analysis.

Paired Samples Test

A paired samples t-test was used to compare the mean scores of the same participants before and after the training and to determine whether the difference between pre-training and post-training scores is statistically significant.
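The calculation behind a paired samples t-test can be sketched in a few lines of Python. This is a minimal illustration, not the analysis used for this report: the scores are hypothetical, the function name `paired_t` is made up for the example, and the test is assumed to be two-tailed at the 0.05 level.

```python
import math
from statistics import mean, stdev


def paired_t(pre, post):
    """Return (t statistic, degrees of freedom) for paired scores."""
    if len(pre) != len(post):
        raise ValueError("each participant needs a pretest and a posttest score")
    # Work on per-participant differences, not on the two group means.
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    # Standard error: sample SD of the differences divided by sqrt(n).
    std_error = stdev(diffs) / math.sqrt(n)
    return mean(diffs) / std_error, n - 1


# Hypothetical scores out of 8 for ten participants (NOT the report's data).
pre_scores = [3, 4, 2, 5, 3, 4, 2, 3, 4, 3]
post_scores = [5, 5, 4, 6, 4, 6, 3, 5, 5, 4]

t_stat, df = paired_t(pre_scores, post_scores)

# Two-tailed critical value at alpha = 0.05 for df = 9, from a standard t table.
CRITICAL_T_DF9 = 2.262
print(f"t = {t_stat:.3f}, df = {df}, significant = {abs(t_stat) > CRITICAL_T_DF9}")
```

The key point is that the test looks at how consistent the individual gains are, not only at whether the average gain is above zero.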

Topics Covered

- Understanding Monitoring & Evaluation: Questions assessed participants’ foundational knowledge in M&E concepts.

- Tools for Project Management: Participants were tested on various tools essential for effective project management.

- Data Collection & Analysis: Questions evaluated participants’ understanding of gathering, analyzing, and interpreting data.

Findings

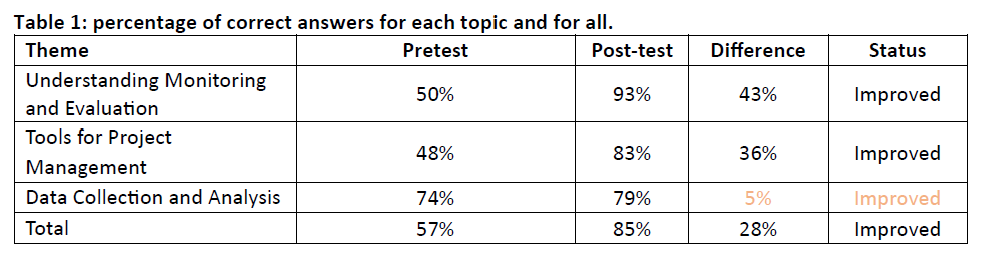

The results in Table 1 indicate an improvement in all three thematic areas, as shown by higher posttest scores. However, an observed improvement is not enough on its own. Statistical significance must be established to confirm that the gains reflect genuine improvements in knowledge or skills rather than random chance or variability.

Understanding Monitoring and Evaluation

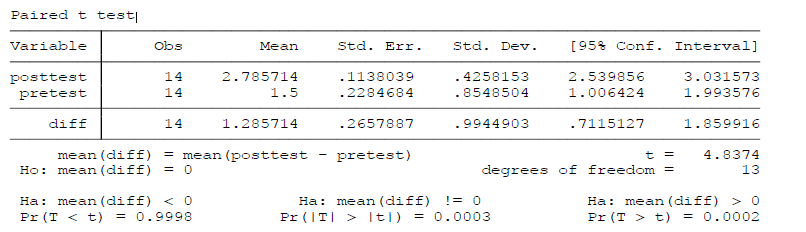

Table 2: Pretest and posttest scores of understanding monitoring and evaluation concepts

Table 2 indicates that participants’ understanding of monitoring and evaluation concepts improved after the training. The hypothesis test results at the bottom of the table show a significant difference between pretest and posttest scores. This suggests that the training was effective in improving understanding of monitoring and evaluation concepts.

Tools for Project Management

Table 3: Pretest and posttest scores for familiarity with tools for project management

The paired t-test results presented in Table 3 indicate a significant improvement from pretest to posttest in familiarity with tools for project management, suggesting that the training was effective for this topic. The p-value is less than 0.05, the threshold for statistical significance.

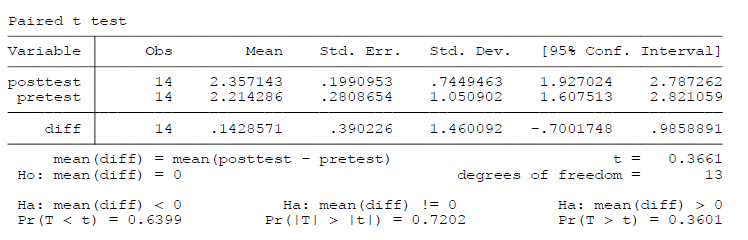
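For readers who want to see where a p-value such as the one reported for Table 3 comes from, the sketch below derives a two-tailed p-value from a t statistic and its degrees of freedom using only the Python standard library. The function names and the example t value are hypothetical, and the numerical integration is an illustrative shortcut; statistical packages compute this value more precisely.

```python
import math


def t_pdf(x, df):
    """Density of Student's t distribution with df degrees of freedom."""
    coef = math.gamma((df + 1) / 2) / (math.sqrt(df * math.pi) * math.gamma(df / 2))
    return coef * (1 + x * x / df) ** (-(df + 1) / 2)


def two_tailed_p(t_stat, df, steps=2000):
    """Two-tailed p-value, integrating the density on [0, |t|] with Simpson's rule."""
    upper = abs(t_stat)
    if upper == 0:
        return 1.0
    h = upper / steps  # steps must be even for Simpson's rule
    total = t_pdf(0.0, df) + t_pdf(upper, df)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * t_pdf(i * h, df)
    central_area = total * h / 3  # probability mass between 0 and |t|
    # Both tails together hold whatever lies outside [-|t|, |t|].
    return max(0.0, 1.0 - 2.0 * central_area)


# Hypothetical example: t = 8.573 with 9 degrees of freedom.
p_value = two_tailed_p(8.573, 9)
print(f"p = {p_value:.6f}, below 0.05: {p_value < 0.05}")
```

This sketch is meant for the small groups typical of a training session; `math.gamma` overflows for very large degrees of freedom.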

Data Collection and Analysis

Table 4: Pretest and posttest scores for methods of data collection and analysis

Table 4 presents the paired t-test results for the Data Collection & Analysis thematic area. Although posttest scores are higher than pretest scores, the paired t-test indicates that this improvement is not statistically significant. We therefore cannot confidently attribute the improvement to the training rather than to random chance or variability. This illustrates why statistical significance must be established before findings are accepted.

Conclusions and recommendations

Based on the findings of this report, we draw the following conclusions and recommendations for future M&E trainings and assessments.

- Instead of classifying results as improved or unimproved based on whether the difference between the pretest and posttest proportions is greater than 0, it is better to disaggregate the analysis by theme and test whether the difference in test scores is statistically significant. This allows us to identify which topics or modules have shown significant improvement and which ones need more attention or reinforcement.

- The results suggest that the training was effective in enhancing the participants’ knowledge and understanding of monitoring and evaluation concepts and tools for project management, as evidenced by the significant improvement in the posttest scores for these topics. Therefore, we recommend that the training content and delivery for these topics be maintained or replicated for future trainings.

- However, the results also indicate that the training did not have a significant impact on the participants’ knowledge and understanding of data collection and analysis methods, as the improvement in the posttest scores for this topic was not statistically significant. Therefore, it is recommended that the training content and delivery for this topic be reviewed and revised to address the gaps or challenges that may have hindered the learning outcomes. Some possible strategies include providing more practical examples and exercises, using more interactive and engaging methods, and offering more feedback and guidance.
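The contrast between a binary classification and a disaggregated significance test can be sketched as follows. Everything in this example is an assumption made for illustration: the per-topic scores are hypothetical, the helper `summarize_topic` is made up, the binary label is simplified to "mean gain greater than zero", and the test is two-tailed at the 0.05 level using critical values from a standard t table.

```python
import math
from statistics import mean, stdev

# Two-tailed critical t values at alpha = 0.05, keyed by degrees of freedom.
T_CRIT_05 = {4: 2.776, 5: 2.571, 6: 2.447, 7: 2.365, 8: 2.306, 9: 2.262}


def summarize_topic(pre, post):
    """Compare the binary label with a paired t-test for one topic."""
    diffs = [after - before for before, after in zip(pre, post)]
    df = len(diffs) - 1
    # Assumes the differences are not all identical (non-zero standard deviation).
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(len(diffs)))
    return {
        "mean_gain": mean(diffs),
        "binary_label": "improved" if mean(diffs) > 0 else "unimproved",
        "t": t_stat,
        "significant": abs(t_stat) > T_CRIT_05[df],
    }


# Hypothetical per-topic scores for six participants (NOT the report's data).
scores = {
    "Understanding M&E": ([1, 2, 1, 2, 1, 2], [3, 3, 2, 3, 2, 3]),
    "Data Collection & Analysis": ([1, 2, 1, 2, 1, 2], [2, 1, 2, 2, 1, 3]),
}

results = {topic: summarize_topic(pre, post) for topic, (pre, post) in scores.items()}
for topic, r in results.items():
    print(f"{topic}: {r['binary_label']}, t = {r['t']:.2f}, significant = {r['significant']}")
```

In this made-up data both topics would be labelled "improved" under the binary rule, but only the first clears the significance threshold, which is the distinction the recommendations above rely on.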

Written by Muhiyadin Aden

R-DATS Consultant with expertise in research, data management, statistical analysis and modeling